Short reads ⚡️

Snowflake is crushing it, and public investors are clamoring to buy more after its recent quarterly earnings. At a surface level, it’s easy to look at Snowflake and say, “oh, it is just a new data warehouse.” It’s not a false statement, but it misses what’s special about Snowflake. Michael Malis, one of the founders at Freshpaint (an Abstraction portfolio company), does a great job of breaking down why it’s exciting. Freshpaint Blog

There was recently an intriguing back-and-forth between Matt Biilmann (founder of Netlify) and Matt Mullenweg (founder of Automattic, the company behind WordPress) on the merits and tradeoffs of WordPress (a very monolithic approach to building a content-based website/app) versus the Jamstack (a very decoupled, composable approach to a content-based website/app). It’s worth reading this Netlify post to grok the ideas behind the two philosophies. Each approach makes sense in some cases, but I would prefer to bet on the flexible/composable approach offered by the Jamstack. Netlify Blog

Long read 🤖

I, too, am tired of reading about GPT-3. That said, this excellent post by Melanie Mitchell puts the model through its paces with a variant of Douglas Hofstadter’s Copycat system to see whether it can make coherent analogies. The results are really fascinating… tl;dr in the quote below (emphasis mine):

The program’s performance was mixed. GPT-3 was not designed to make analogies per se, and it is surprising that it is able to do reasonably well on some of these problems, although in many cases it is not able to generalize well. Moreover, when it does succeed, it does so only after being shown some number of “training examples”. To my mind, this defeats the purpose of analogy-making, which is perhaps the only “zero-shot learning” mechanism in human cognition — that is, you adapt the knowledge you have about one situation to a new situation. You (a human, I assume) do not learn to make analogies by studying examples of analogies; you just make them. All the time.

The potential magic of GPT-3 (or any new tech) isn’t that it’s excellent at everything. It is that it “is able to do reasonably well” at many, many things. Lowering the barriers to accomplishing work and improving the initial quality are two constant drumbeats in technological innovation.

Zooming out, GPT-3 works as a stand-in for any potentially disruptive technology: it’s a case study in step-function improvement. After all, these technologies often look like toys in the beginning.

Graphic I love 🎨

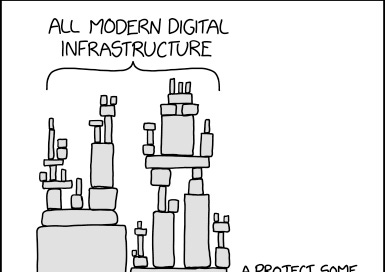

Randall Munroe of XKCD may be the most underrated genius of this generation. His comics and books are delightfully on-point. As much as I believe in the power of open-source and think that the fragmentation in modern software architecture is not inherently bad, it definitely comes with tradeoffs.

As usual, Munroe’s comic has a kernel of truth in it: there was a day a few years ago (in 2016) when a tiny (as in 11 lines of code) JavaScript package called left-pad was removed from npm (the go-to JS package manager), and its removal broke the builds of a nontrivial portion of the internet’s JavaScript projects.
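
To give a sense of how small an 11-line dependency is, here is a minimal sketch of a string left-padding helper, the kind of utility the removed package (left-pad) provided. This is an illustration written for this post, not the package's actual source; the function name `leftPad` and its parameters are assumptions chosen for the example.

```typescript
// Hypothetical sketch of a left-padding helper; NOT the original package's code.
function leftPad(input: string | number, length: number, padChar: string = " "): string {
  // Use only the first character of padChar, falling back to a space if it is empty.
  const fill = padChar.length > 0 ? padChar[0] : " ";
  let result = String(input);
  // Prepend the fill character until the string reaches the target length.
  while (result.length < length) {
    result = fill + result;
  }
  return result;
}

console.log(leftPad("5", 3, "0")); // "005"
console.log(leftPad("abc", 2));    // "abc" (already long enough, returned unchanged)
```

Something this small is easy to write yourself, and that is the tradeoff the comic pokes at: when thousands of projects depend on a tiny block like this, its disappearance can topple everything stacked on top of it.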

Wikipedia rabbit hole 📖

Spherical Cows. This is such a beautiful, hilarious, and widely applicable metaphor. I’m fond of reducing ideas to their component assumptions because it’s then easier to sort those assumptions into categories like “I believe that,” “I am not sure but could be convinced of that,” and “nope, no way.” If you find a spherical cow embedded in one of those assumptions, it’s time to revisit it.

Parting thought 🤔

Think back to your Econ 101 class: ceteris paribus is typically translated as “all other things being equal,” but maybe “assuming a spherical cow in a vacuum” is a plausible alternative?